Are AI Tools Like GPT-4 Making Us Overconfident?

Examining the Dunning-Kruger effect in the age of large language models.

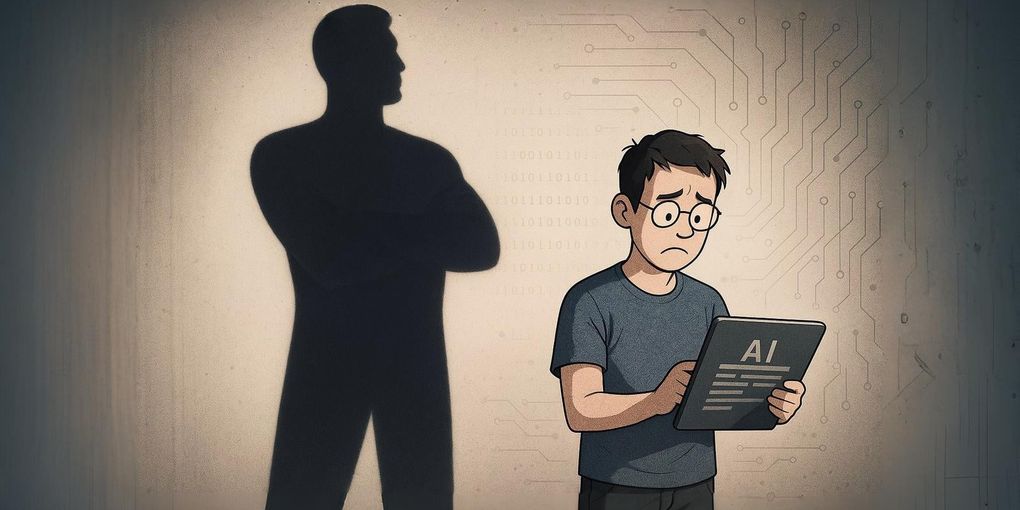

Large language models (LLMs) like GPT-4 have transformed how we get information. They generate fluent responses, answer tough questions, and mimic human conversation with ease. But there’s a growing concern: these tools might be feeding the Dunning-Kruger effect, the tendency for people with limited knowledge of a subject to overestimate their competence in it.

What Is the Dunning-Kruger Effect?

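Named after psychologists David Dunning and Justin Kruger, who described it in a 1999 study, the Dunning-Kruger effect is a mental blind spot. People who know little about a subject often lack the self-awareness to recognize their own ignorance, because the knowledge needed to perform well is often the same knowledge needed to judge how well you are performing. As a result, the least skilled tend to overestimate their ability the most.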

How AI Makes the Problem Worse

- Instant answers create false confidence: LLMs offer slick, immediate responses. But just because an answer sounds right doesn’t mean the user understands the topic. The speed and polish can trick people into thinking they “get it” when they really don’t.

- Professional tone masks superficiality: AI-generated content often comes across as articulate and authoritative. That style can hide the fact that the substance may be shallow or even wrong. Because a fluent explanation feels like understanding, this veneer of credibility can lead users to overrate their own grasp of complex issues.

- Reliance replaces real learning: Why read a textbook when GPT can explain it in two seconds? That mindset can erode deep learning habits. As users lean more on AI, they may lose the drive to study thoroughly or think critically.

- Feedback loops reinforce bias: LLMs respond to the prompts they are given. If a prompt carries a bias, the output often reflects it without offering a counterpoint. This can trap users in echo chambers, where bad ideas get repeated and reinforced.

Why This Matters

In the workplace: Superficial knowledge can breed overconfidence and, with it, bad decisions. In high-stakes fields like healthcare, law, or engineering, those decisions can have serious consequences. To counter this, organizations need cultures that encourage asking tough questions and seeking expert input.

In education: Students might skip the hard work and go straight to AI for answers. That undermines critical thinking and problem-solving—skills they’ll need when the AI can’t help.

In public discourse: People armed with AI soundbites may argue with misplaced confidence. This can fuel division and reduce conversations to battles of bravado, not thoughtful debate.

How to Push Back

- Teach critical thinking: Schools and companies should train people to question what they read, including AI responses. Understanding should matter more than just “knowing stuff.”

- Use AI as a supplement, not a substitute: LLMs work best when paired with real learning. They’re tools, not replacements for expert guidance or deep study.

- Build transparency into AI: Future models should clearly signal what they don’t know or when their answers are based on limited data. That kind of honesty can keep users grounded.

Bottom Line

AI can be a powerful ally. But if we’re not careful, it can also boost our confidence while hollowing out our competence. The key is using it wisely—with humility, skepticism, and a commitment to real understanding.

What’s your take? Let’s talk—drop your thoughts in the comments.